Tutorial Series: How to optimize I/O performance of your deep learning model

Introduction

In the research journey to optimize the performance of your deep learning model, most previous works focused more on training the model or on the model inference. However, most of the time we work with huge datasets, and the process of loading a dataset takes a long time which increases the total training time of the model. Within popular naïve approaches, we used to load batches of datasets every time we needed to train on them, we often end up investing more in storage than what is necessary, which is an additional expense that can be avoided by optimizing your code.

In this article, we will take a deeper look at how naïve approaches use our CPUs and GPUs and how to better sequence the repetitive process of loading data using pipeline optimization techniques built on TensorFlow and PyTorch. At the end of this article, we will present one of the benchmarking tests we conducted on Genesis Cloud instances using an integrated framework called FFCV, and show how its use can contribute to a 52.3% total savings in training cost over a vanilla algorithm on a Genesis Cloud instance and up to 68.3% over an AWS V100 instance.

By the end of this article, you should be able to learn some of the most popular techniques for building an efficient input data pipeline for your use cases, and how these optimizers can help reduce your total training time as well as your resource usage, and thus making the model training less costly.

Naïve approaches to input pipeline:

A study of machine learning model training conducted on several huge datasets showed that data ingestion and preprocessing take up to 65% of the epoch time, showing the importance of optimizing input data pipelines. The process of creating an input data pipeline for machine learning model training can be described in three consecutive steps called the ETL process. The first step is Extraction, where the input data is read from a storage system, which can be from a local data source or a remote one such as cloud storage. The second stage is Transformation, the input data is transformed at this stage to be ready for machine learning training, here the data is shuffled, filtered, and sampled to create batches for training, another popular practice is data augmentation mainly used to increase the amount of data if necessary. The last step is data Loading, which includes cleaning and shaping the data and thus loading it onto the accelerator device for training.

A single training step consumes a batch of input data and then updates the model weights. Training is often run on an accelerating device such as a GPU or TPU for their ability to perform common linear algebra operations in machine learning computations, while the CPU is the one responsible for preprocessing data and therefore feeding it to the accelerators. The combination of these two working together is the main pillar for achieving good performance and avoiding pipeline stalls, and that’s exactly what the naïve approach fails to do.

As shown in the figure below, data processing and model training are done completely separately in a naïve approach. While the CPU fetches the data to prepare it for training, the GPU sits idle, waiting for the data to load. Later, when the GPU starts training, it’s time for the CPU to sit idle. This approach is less efficient at managing our resources because more time is spent waiting rather than on executing tasks.

Source: tensorflow.org

To optimize our performance, reduce our training time, and efficiently manage our resources, we need to explore the techniques on both TensorFlow and PyTorch that attempt to touch the three ETL steps mentioned earlier to achieve an optimal compromise.

Optimization practices in TensorFlow:

If you are working with TensorFlow and want to use your resources efficiently, you need to know about the tf.data API. The tf.data API is responsible for enabling software pipelining and parallel execution of calculations and I/O.

The main class of tf.data is tf.data.Dataset which supports writing efficient input pipelines. In this chapter, we will introduce the main options of tf.data.Dataset that can help achieve the optimization of the input pipeline.

Prefetching:

Earlier in this article, we mentioned that loading data and training models done separately can be time-consuming in one step. The prefetch feature in tf.data.Dataset allows you to overlap data pre-processing and model execution of a training step. To do this, the prefetch function uses a data buffer to decouple the consumer and producer of data, and thus overlap their computation. It is mandatory to know the number of items to prefetch before doing so, a common practice is to set it to tf.data.AUTOTUNE which will instruct tf.data to dynamically allocate the buffer size at runtime.

Source: tensorflow.org

As shown in the figure, after you apply the prefetch function, as the training step is being executed for the first batch, the input pipeline is already reading the data for the second.

Parallelizing:

Data extraction in the real-world setting may require high computational costs due to various data extraction overheads. An Interleave transformation can help avoid that issue by providing a certain degree of parallelism to use for fetching data from input sources, either local or remote.

Source: tensorflow.org

After loading the data, some data processing steps are commonly performed on the CPU cores which can be costly operations as well. For this, a map transformation is introduced to enable the parallelizing of the data transformation. The map transformation provides the num_parallel_cells argument to indicate the degree of parallelism to use for applying the user-defined function to input elements.

Source: tensorflow.org

Note that both interleave and map transformation support the tf.data.AUTOTUNE which can dynamically decide on what level of parallelism to use at runtime and is proven to match the performance of expert hand-tuned input pipelines (see tf.data: A Machine Learning Data Processing Framework). When tf.data.AUTOTUNE is used, TensorFlow uses a heuristic to determine the optimal value for the parallelism parameter. Specifically, TensorFlow monitors the time it takes to process each batch of data, and adjusts the parallelism parameter accordingly to maximize performance. If the model is able to process the data quickly, TensorFlow will increase the parallelism parameter to prefetch more data in the background. If the model is struggling to keep up with the prefetching, TensorFlow will decrease the parallelism parameter to reduce the amount of data that is being prefetched.

Caching:

If you have checked all the figures above, you may have noticed that after applying all the previous transformations, the datasets we’re using are opened before each step, which is the same operation repeated over and over again. The tf.data.Dataset.cache can help buy you the time of file opening by caching your dataset, either in the system memory or local storage, and therefore opening it just once at the start of your training and accessing it as needed. Apart from reducing step time, caching data also helps to improve the performance of big data analysis.

Source: tensorflow.org

As shown here, when caching is used, the opening of data files is performed only once at the first epoch. The next epochs will reuse the cached data with the same order of elements, it is a common practice to use shuffle if you want to randomize the order of the elements at every epoch.

Optimization practices in PyTorch:

In the previous chapter, we explained how you can optimize your data pipeline using tf.data provided by TensorFlow. In this chapter, we will explain alternative features used in PyTorch to mitigate the I/O overheads and optimize the input pipeline.

DataLoader

This section will shed light on the main data loading utility in Pytorch which is the torch.utils.data.DataLoader class. We will go over the most important arguments of the DataLoader and how to configure each one to answer your requirements. The parameters of DataLoader are as follows:

DataLoader(dataset, batch_size=1, shuffle=False, sampler=None, batch_sampler=None, num_workers=0, collate_fn=None, pin_memory=False, drop_last=False, timeout=0, worker_init_fn=None, *, prefetch_factor=2, persistent_workers=False)

After loading your dataset in the dataset parameter, you can shuffle it by setting shuffle to true, then your samples are shuffled and loaded in batches according to the number of batches you set in batch_size. Therefore you can apply similar practices mentioned earlier in the TensorFlow section. First, to train your model on parallel processes, you need to allow multiprocessing by increasing the number of num_workers, the default 0 value indicates that the main process is the one responsible for loading the data. To parallelize your model training and data fetching, you use the data prefetcher in Pytorch and load several batches in advance. All you need to do is set prefetch_factor to the number of batches that you want to prefetch across all workers. Although this approach is supposed to speed up your pipeline, you need to make sure that sacrificing several parallel data loaders doesn’t slow down the CPU-side data loading. If the value of prefetch_factor is set too high, it can cause the data loader to consume more CPU and memory resources, which could potentially slow down the overall performance of the model. In that case, you can try decreasing the value of prefetch_factor to reduce the amount of data that is being prefetched. This will reduce the resource usage of the data loader, which may help to improve the overall performance of the model.

FFCV

Although DataLoader can contribute greatly to the optimization of data input, it carries a great risk of decoding bottleneck by the CPU if there is no careful communication between the processes and especially between the GPU and the CPU, as the complexity of the program increases.

FFCV is a built-in PyTorch-compatible library to speed up the data loading process and thus increase throughput for training models. FFCV helps alleviate data loading bottlenecks that make the loading process time-consuming. It’s easy to implement because it can be introduced in code with just a few lines of code and thus swap the DataLoader structure we mentioned earlier into a faster and more optional pipeline. FFCV can automatically handle pre-fetching, caching, and forwarding between CPU and GPU, which can reduce human error and utilize the most optimized features. Additionally, with FFCV, users can efficiently interleave multiple models on the same GPU, as it uses fully asynchronous thread-based data loading.

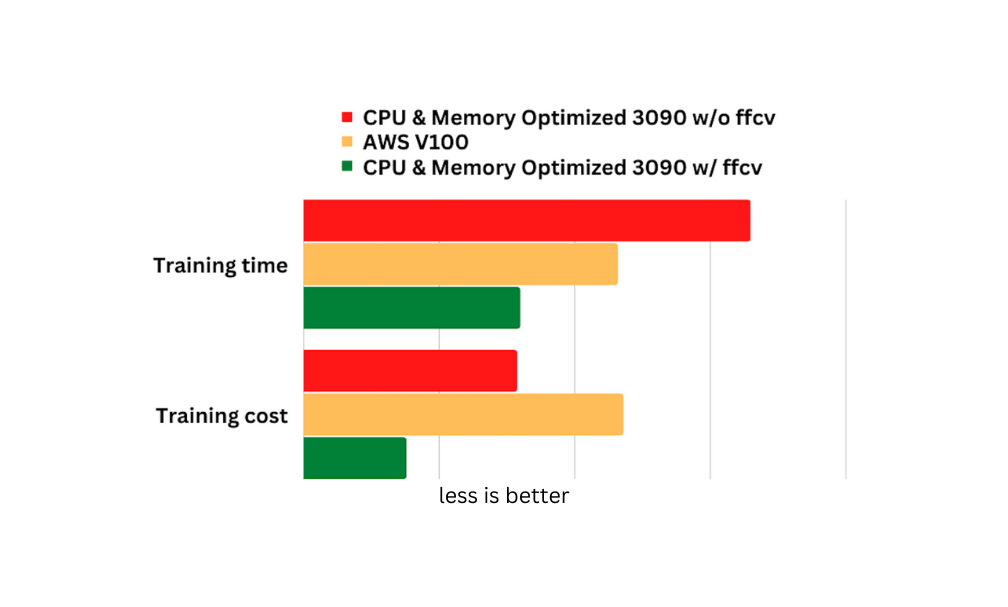

The results published by the FFCV team showed that by integrating the library, they achieved better results faster and less expensive than vanilla PyTorch approaches. We took this into account and began benchmarking tests on Genesis Cloud instances, primarily the newer CPU and memory-optimized RTX 3090 GPU instances to inspect the effects of integrating FFCV into the training algorithm. As an example, we trained a RESNET-9 architecture on a CIFAR-10 dataset at a specified 93.5% accuracy and compared training time and cost between performance of a NVIDIA® GeForce™ RTX 3090 instance provided by Genesis Cloud without and with the integration of FFCV, as well as the comparison of these with the training on a V100 GPU instance provided by AWS.

Benchmarking test on a GC instance + Price Comparison with AWS:

RESNET-9 Architecture on CIFAR-10 Dataset:

| Time (s) | Accuracy (%) | Cost-to-Train - On-demand | |

|---|---|---|---|

| Genesis Cloud RTX 3090 CPU & Memory Optimized instance w/o FFCV | 165 | 93.5 | $0.065 |

| AWS V100 | 116 | 93.5 | $0.098 |

| Genesis Cloud RTX 3090 CPU & Memory Optimized instance w/ FFCV | 80 | 93.5 | $0.031 |

As demonstrated in the table and accompanying graph, the incorporation of FFCV led to a substantial improvement in efficiency. The training time was reduced by over 50%, resulting in a corresponding reduction in cost, from 0.065 to 0.031. Furthermore, when compared to an AWS V100 instance, the CPU and memory-optimized 3090 instances remain a cost-effective option, as the training cost is only one-third that of the V100 instance. This level of performance optimization can have a significant impact on training tasks that would otherwise consume excessive amounts of time and resources.

Conclusion

Continuing the journey to make machine learning accessible to all, this tutorial series article aims to guide the reader through optimizing their data loading pipeline and thus their model training in an efficient and cost-effective manner, particularly when working on Genesis Cloud instances.

Keep accelerating 🚀

The Genesis Cloud team

Never miss out again on Genesis Cloud news and our special deals: follow us on Twitter, LinkedIn, or Reddit.

Sign up for an account with Genesis Cloud here and benefit from $15 in free credits. If you want to find out more, please write to [email protected].

Written on January 23rd , 2023 by Marouane Khoukh